Blog

Learn everything from basic SEO to advanced optimisation strategies with our library of SEO articles, covering the latest SEO news, industry insights, thought leadership pieces, and research studies.



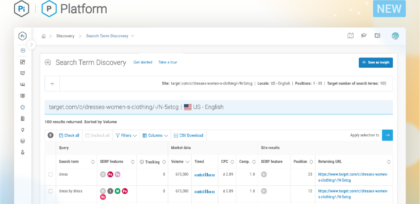

Get ready to discover untapped SEO opportunities